Key Results

- Reduced deployment risk at scale

- Faster time to resolution

- Standardized triaging workflow = improved operational efficiencies

- Reduced false positives & negatives = fewer unhandled exceptions

SDK

JavaScript, Ruby, Go, Python

Solutions

Sentry Integration Platform, Release Health, Context, Issue Details, Custom Alerts

Related Content

The Sentry Integration Platform

Sentry + Javascript

Combining Custom Queries and Alert Thresholds for Faster Triaging

How A New Release Monitoring Workflow Improved Instacart’s CI/CD Canary Analysis

Headquartered in San Francisco, Instacart is the leading grocery technology company in North America. Ranked by Forbes as one of America’s best startup employers of 2022, the company partners with grocers and retailers representing more than 80% of the U.S. grocery industry to facilitate online shopping, delivery, and pickup services from more than 75k stores in the Instacart Marketplace.

When it came to a major release, Instacart’s infrastructure engineering team realized that their existing workflow for monitoring the health of hundreds of microservices was no longer sustainable. A single bug in the codebase could impact millions of customers and more than 600k shoppers, so they needed a better way to detect issues in production before they impacted users.

This meant:

- Having detailed error context to speed up time to resolution

- Correlating issues with deployments for faster triaging

- Reducing false positives – and negatives – on the frontend and backend to cut out ‘background noise’ and eliminate unhandled exceptions

Not all services have the metrics to tell the story of when a bug was introduced in the code, making it hard to investigate.

Igor Dobrovitski, Infrastructure Software Engineer, Instacart

Preventing broken code from making it to production

Instacart’s CI/CD pipeline introduces complexity when deploying new features and updates at scale, potentially impacting overall software quality. Igor’s team owns infrastructure, observability, developer productivity, and build & deploy. To maintain velocity and quality, they combine automated canary analysis, using Kayenta, with their CI/CD pipeline. By partially deploying new releases to a small number of nodes, they’re able to analyze key metrics to assess how those releases perform compared to previous stable releases.

The process relies on existing metrics showing historic success/failure rates. If a new release performs worse than previous stable releases, it’s rolled back, giving developers time to investigate and resolve any issues.
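As a rough illustration of the comparison described above, the following sketch checks a canary's success rate against a stable release and signals a rollback when it falls short. The `ReleaseStats` structure, the `should_roll_back` function, and the 1% `tolerance` are assumptions made for this example; they are not Instacart's or Kayenta's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class ReleaseStats:
    """Request outcomes observed for one release (hypothetical structure)."""
    successes: int
    failures: int

    @property
    def success_rate(self) -> float:
        total = self.successes + self.failures
        # With no traffic there is no evidence of failure; treat as healthy.
        return self.successes / total if total else 1.0


def should_roll_back(canary: ReleaseStats, stable: ReleaseStats,
                     tolerance: float = 0.01) -> bool:
    """Return True when the canary's success rate is lower than the stable
    release's rate by more than `tolerance` (an assumed threshold)."""
    return canary.success_rate < stable.success_rate - tolerance


stable = ReleaseStats(successes=9_950, failures=50)  # 99.5% success
canary = ReleaseStats(successes=480, failures=20)    # 96.0% success
print(should_roll_back(canary, stable))  # True
```

A fixed tolerance is the simplest possible rule; a production judge would more likely apply a statistical test over many data points rather than a single rate comparison.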

The initial iteration of this process was essentially just software testing. The idea seemed simple, but the team knew that testing alone wouldn’t cut it. They’d need metrics showing historic success or error rates to compare past, stable releases with what they were canarying.

Tapping into an existing resource

Engineering teams at Instacart already use Sentry to monitor frontend and backend issues in JavaScript, Ruby, Go, and Python. Custom tags let developers assign projects, teams, and product areas to specific issues, and correlate them with deployments for faster triaging.

Custom alerts help them stay on top of the volume and frequency of new errors, and when an error occurs, developers working on the related project are notified. During triage, issues are linked in Sentry, outlining the error, the exception that occurred, and its corresponding release, before the release is rolled back.

Wherever a ‘bad release’ was at fault, Igor’s team found that there was often a corresponding Sentry alert notifying developers of error spikes. Engineers triaging could rely on the details they got from Sentry since nearly all instances of buggy releases were already logged.

The infrastructure team realized that, since other teams had already been adding detailed context to issues and correlating them by deployment, there’d be a considerable amount of historical data to leverage for canary analysis.

Canary analysis 2.0

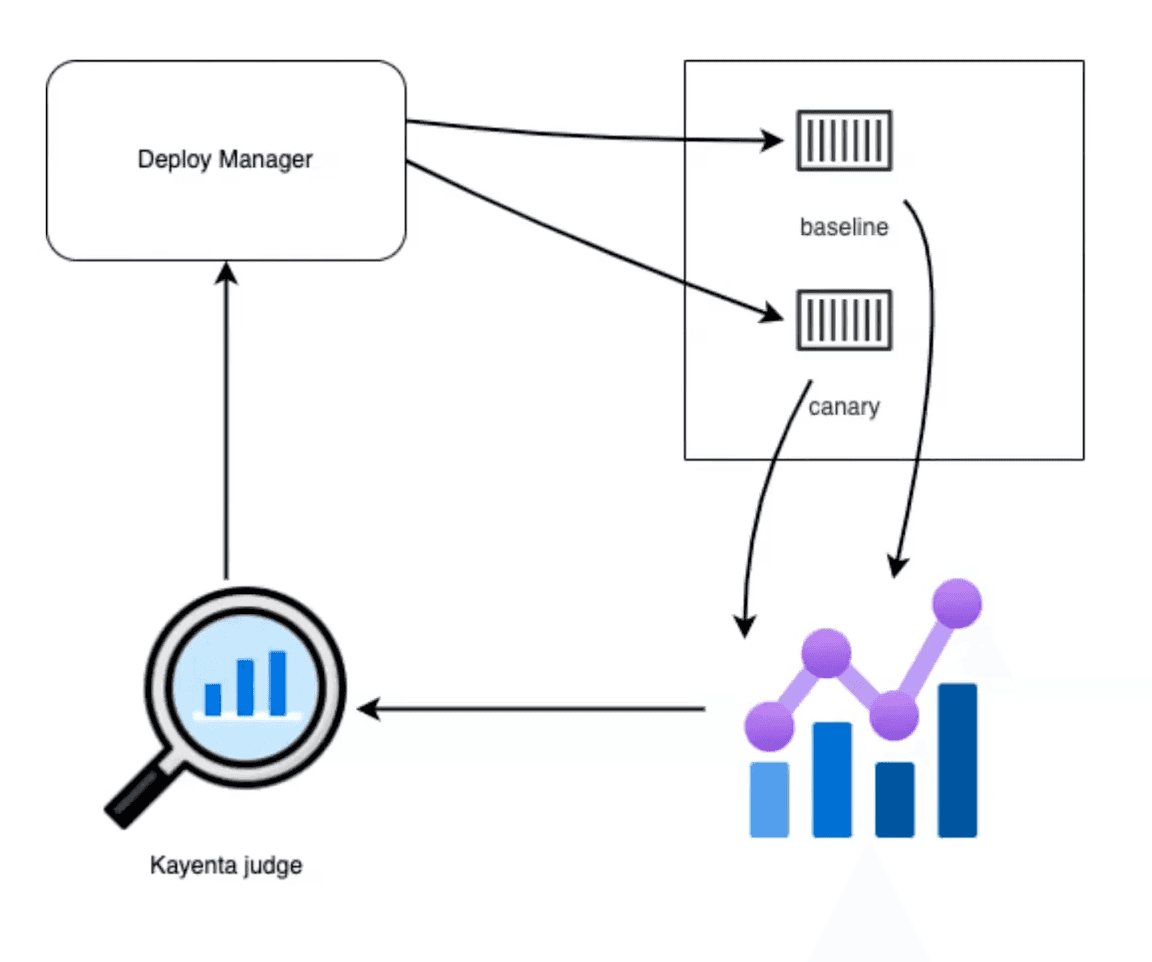

The first iteration of Instacart’s automated canary analysis has a deploy manager simultaneously deploying a stable, existing release (the ‘baseline’) alongside the new release (the ‘canary’) onto two sets of tasks in the cloud. Each set is roughly 2-5% of the service’s overall capacity.

Then they start comparing the baseline and canary, with both sending metrics tagged to their respective releases to Kayenta, which reads metrics from both, processes them, and determines any statistical irregularities to identify potential degradation in the canary. Seems like a simple system, right? It is, but only if it has reliable metrics to go on.

Canary analysis before Sentry integration


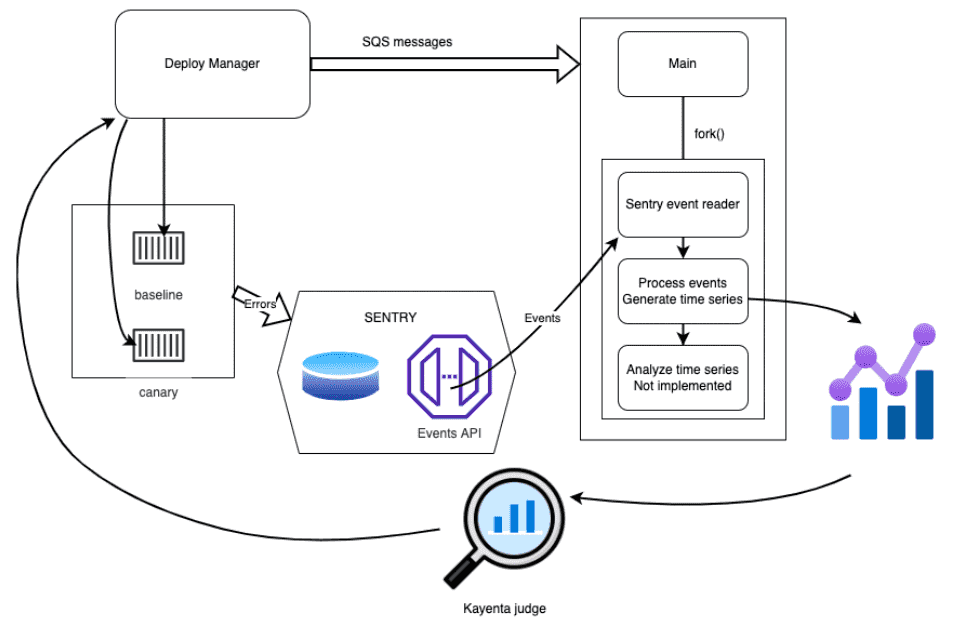

Igor’s team then started looking at Sentry to extract more detailed data to add to the automated analysis. The deploy manager still deploys baseline and canary, but also sends an SQS message to a custom service, which kicks off a new Sentry integration that runs for the test duration. The integration queries Sentry’s V2 API for the service being tested and reads the errors that service generates in real time. It then splits them into those coming from the baseline and those originating in the canary, disregarding everything else.
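The splitting step can be pictured with a short sketch. It assumes each error event has already been fetched and is represented as a plain dictionary with a `release` key; that shape, the `split_by_release` function, and the version strings are hypothetical and chosen only for illustration, not taken from Sentry's API or Instacart's service.

```python
def split_by_release(events, baseline_release, canary_release):
    """Group error events into 'baseline' and 'canary' buckets by their
    release value, dropping events that belong to any other release."""
    labels = {baseline_release: "baseline", canary_release: "canary"}
    buckets = {"baseline": [], "canary": []}
    for event in events:
        label = labels.get(event.get("release"))
        if label is not None:
            buckets[label].append(event)
    return buckets


# Hypothetical events for one service during a canary test.
events = [
    {"release": "v1.4.0", "message": "TimeoutError"},
    {"release": "v1.5.0", "message": "KeyError"},
    {"release": "v1.3.2", "message": "ValueError"},  # neither release: ignored
    {"release": "v1.5.0", "message": "KeyError"},
]
buckets = split_by_release(events, baseline_release="v1.4.0",
                           canary_release="v1.5.0")
print(len(buckets["baseline"]), len(buckets["canary"]))  # 1 2
```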

Canary analysis after Sentry integration


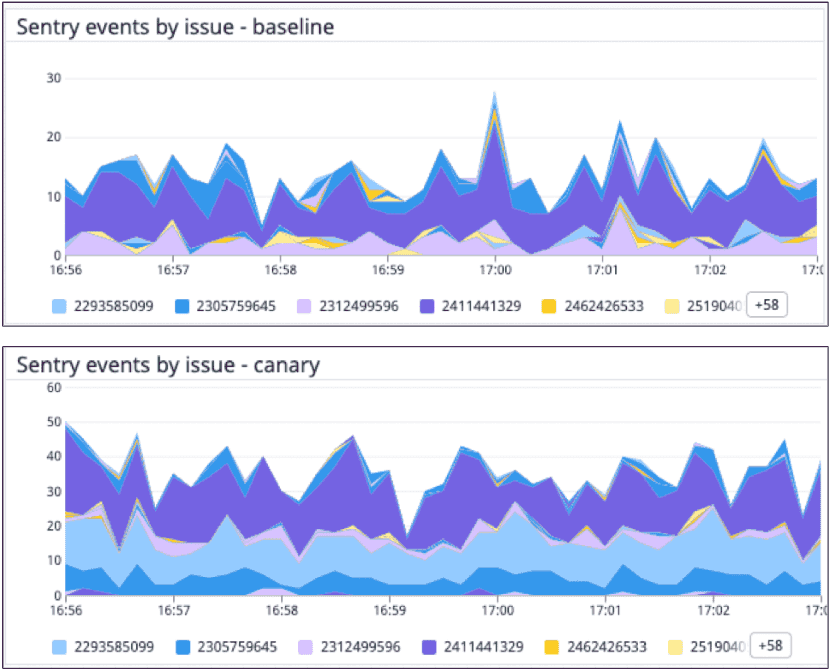

The process leaves about 2% of the overall traffic attributed to baseline and canary respectively. They then add all of the exceptions together and keep a running count, updated every 10 seconds, to monitor whether the canary is throwing more exceptions than the baseline.

Exceptions are exported as data points to Kayenta, which then rolls back the deployment if the canary is returning more exceptions than the baseline. Using Sentry’s event APIs specifically meant that they only had to query errors relevant to that specific canary release, automatically disregarding the rest.
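A minimal sketch of the running comparison, assuming exception timestamps (in seconds) are available for each release. The function names and the sample timestamps are invented for illustration, and the "first window where the canary's running total exceeds the baseline's" rule is a simplification; in the workflow described here, the actual judgement is made by Kayenta from the exported data points.

```python
from collections import Counter

WINDOW_SECONDS = 10  # the counting interval mentioned in the text


def count_per_window(timestamps, start):
    """Count exceptions per 10-second window, keyed by window index."""
    return Counter(
        int((ts - start) // WINDOW_SECONDS) for ts in timestamps if ts >= start
    )


def first_degraded_window(baseline_ts, canary_ts, start):
    """Return the index of the first window in which the canary's running
    exception total exceeds the baseline's, or None if it never does."""
    base = count_per_window(baseline_ts, start)
    canary = count_per_window(canary_ts, start)
    base_total = canary_total = 0
    for window in sorted(set(base) | set(canary)):
        base_total += base[window]
        canary_total += canary[window]
        if canary_total > base_total:
            return window
    return None


# Hypothetical exception timestamps, in seconds since the test started.
baseline_ts = [1, 12, 25]
canary_ts = [3, 14, 15, 27, 28]
print(first_degraded_window(baseline_ts, canary_ts, start=0))  # 1
```

Because baseline and canary receive roughly equal shares of traffic, raw counts are comparable here; with unequal shares the counts would need to be normalized by traffic first.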

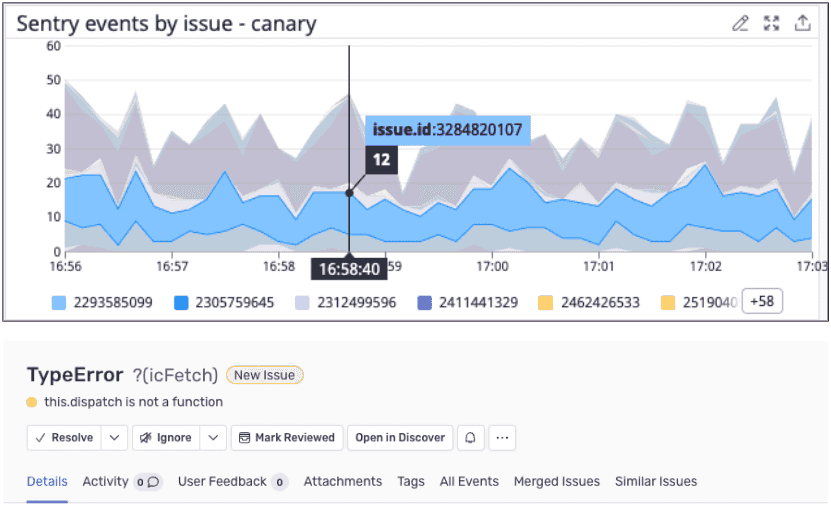

Speeding up time to resolution

With source maps integrated into their CI pipeline, developers triaging issues get additional source code context in stack traces. Issue IDs show the timeline of actions that led up to any errors, combined with existing context, ultimately speeding up time to resolution while reducing deployment risk.

Since integrating Sentry into their new canarying workflow at the start of 2022, Instacart’s infrastructure team has been able to detect and resolve 81 of 129 ‘buggy releases’. Attaching context and issue details from past releases reduced the number of false positives and negatives and improved overall accuracy, reducing background noise and leading to fewer ignored exceptions. This more robust, standardized workflow combined with actionable details helps developers prioritize and resolve issues that could otherwise impact millions of users.

The ‘signal’ we get from Sentry is the most reliable indicator of software issues and is used throughout Instacart because it can be easily configured for each service regardless of the language or framework.